-

Jackie Kennedy ignored Maria Callas’ affair with husband Aristotle Onassis: pal - 10 mins ago

-

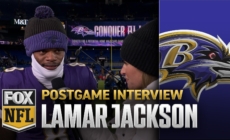

Lamar Jackson on Ravens' win over Steelers and clinching the playoffs – 'It feels good' | NFL on FOX - 14 mins ago

-

Yankees Likely To Sign Alex Bregman Following Paul Goldschmidt Deal - 37 mins ago

-

The Circle of Light Closes and Illuminates the World - 56 mins ago

-

Texas, fueled by adversity and last year’s CFP loss, tops Clemson in playoff opener - 59 mins ago

-

College Football Playoff: Texas Eliminates Clemson, Will Play Arizona State in Peach Bowl - about 1 hour ago

-

Juju Watkins drills a 3-pointer over Paige Bueckers, extending USC’s lead over UConn - 2 hours ago

-

How To Get Your Steps in Over the Holidays, According to Personal Trainers - 2 hours ago

-

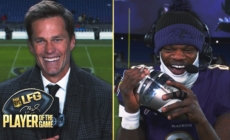

Tom Brady's LFG Player of the Game: Ravens' Lamar Jackson | Week 16 DIGITAL EXCLUSIVE - 2 hours ago

-

Alpha Prime Racing Confirms Huge Crew Chief Signing For NASCAR Xfinity Series - 3 hours ago

Elon Musk joins hundreds calling for a six-month pause on AI development in an open letter

Billionaire Elon Musk, Apple co-founder Steve Wozniak and former presidential candidate Andrew Yang have joined hundreds of others in signing an open letter that calls for a six-month pause on AI experiments and warns of “profound risks to society and humanity.”

“Contemporary AI systems are now becoming human-competitive at general tasks,” reads the open letter, posted on the website of Future of Life Institute, a non-profit. “Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us?”

The futurist collective, backed by Musk, previously stated in a 2015 open letter that it supported the development of AI to benefit society but was wary of the potential dangers.

The latest open letter, signed by 1,124 people as of Wednesday afternoon, points to OpenAI’s GPT-4 as a warning sign. The current version, the company boasts, is more accurate and human-like, and can analyze and respond to images. It even passed a simulated bar exam.

“At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models,” a recent post from OpenAI states.

“We agree. That point is now,” the futurists write. “This does not mean a pause on AI development in general, merely a stepping back.”

The call for a pause may seem at odds with Musk’s own artificial intelligence efforts.

“I’m a little worried about the AI stuff,” Musk said from a stage earlier this month, surrounded by Tesla executives, Reuters reports.

Researchers who have spoken to CBS News have voiced the same concern, albeit a little more directly.

“I think that we should be really terrified of this whole thing,” Timnit Gebru, an AI researcher, told CBS Sunday Morning earlier this year.

Some are worried that people will use ChatGPT to flood social media with phony articles that sound professional, or bury Congress with “grassroots” letters that sound authentic.

“We should understand the harms before we proliferate something everywhere, and mitigate those risks before we put something like this out there,” Gebru told Sunday Morning.

The proposed pause should be seen as a way to make AI development “more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal,” while developers work alongside lawmakers to create AI governance systems.

“Society has hit pause on other technologies with potentially catastrophic effects on society. We can do so here,” the letter reads. “Let’s enjoy a long AI summer, not rush unprepared into a fall.”
