-

The Circle of Light Closes and Illuminates the World - 26 mins ago

-

Texas, fueled by adversity and last year’s CFP loss, tops Clemson in playoff opener - 30 mins ago

-

College Football Playoff: Texas Eliminates Clemson, Will Play Arizona State in Peach Bowl - 49 mins ago

-

Juju Watkins drills a 3-pointer over Paige Bueckers, extending USC’s lead over UConn - about 1 hour ago

-

How To Get Your Steps in Over the Holidays, According to Personal Trainers - about 1 hour ago

-

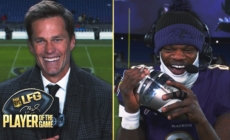

Tom Brady's LFG Player of the Game: Ravens' Lamar Jackson | Week 16 DIGITAL EXCLUSIVE - 2 hours ago

-

Alpha Prime Racing Confirms Huge Crew Chief Signing For NASCAR Xfinity Series - 2 hours ago

-

2 U.S. Navy pilots eject to safety after friendly fire downs their fighter jet - 2 hours ago

-

JuJu Watkins and No. 7 USC hold off Paige Bueckers and fourth-ranked UConn 72-70 - 3 hours ago

-

Today’s ‘Wordle’ #1,282 Answers, Hints and Clues for Sunday, December 22 - 3 hours ago

New laws close gap in California on deepfake child pornography

Using an AI-powered app to create fake nude pictures of people without their consent violates all sorts of norms, especially when those people are minors.

It would not, however, violate California law — yet. But soon it will.

A pair of bills newly signed by Gov. Gavin Newsom outlaw the creation, possession and distribution of sexually charged images of minors even when they’re created with computers, not cameras. The measures take effect Jan. 1.

The expansion of state prohibitions comes as students are increasingly being victimized by apps that use artificial intelligence either to take a photo of a fully clothed real person and digitally generate a nude body (“undresser” apps) or seamlessly superimpose the image of a person’s face onto a nude body from a pornographic video.

According to a survey released last month by the Center for Democracy & Technology, 40% of the students polled said they had heard about some kind of deepfake imagery being shared at their school. Of that group, nearly 38% said the images were nonconsensual and intimate or sexually explicit.

Very few teachers polled said their schools had steps in place to slow the spread of nonconsensual deepfakes, the center’s report said, adding, “This unfortunately leaves many students and parents in the dark and seeking answers from schools that are ill-equipped to provide them.”

What schools have tended to do, according to the center, is respond with expulsions or other penalties for the students who create and spread the deepfakes. For example, the Beverly Hills school district expelled five eighth-graders who shared fake nudes of 16 other eighth-graders in February.

The Beverly Hills case was referred to police, but legal experts said at the time that a gap in state law appeared to leave computer-generated child sexual abuse material out of state prosecutors’ reach — a situation that would apply even if the images are being created and distributed by adults.

The gap stems in part from the state’s legal definition of child pornography, which did not mention computer-generated images. A state appeals court ruled in 2011 that, to violate California law, “it would appear that a real child must have been used in production and actually engaged in or simulated the sexual conduct depicted.”

Assembly Bill 1831, authored by Assemblymember Marc Berman (D-Menlo Park), expands the state’s child-porn prohibition to material that “contains a digitally altered or artificial-intelligence-generated depiction [of] what appears to be a person under 18 years of age” engaging in or simulating sexual conduct. State law defines sexual conduct as not just sexual acts but also graphic displays of nude bodies or bodily functions for the purpose of sexual stimulation.

Once AB 1831 goes into effect next year, AI-generated and digitally altered material will join other types of obscene child pornography in being illegal to knowingly possess, sell to adults or distribute to minors. It’s also illegal to be involved in any way in the noncommercial distribution or exchange of such goods to adults knowing that they involve child pornography, even if they’re not obscene.

Senate Bill 1381, authored by Sen. Aisha Wahab (D-Hayward), covers similar ground, amending state law to clearly prohibit using AI to create images of real children engaged in sexual conduct, or using children as models for digitally altered or AI-generated child pornography.

Ventura County resident and former Disney actress Kaylin Hayman, 16, was a vocal advocate for AB 1831, having experienced the problem firsthand. According to the Ventura County district attorney’s office, a Pennsylvania man created images that spliced her face onto sexually explicit bodies — images that were not punishable at the time under California law. Instead, the man was prosecuted in federal court, convicted and sentenced to 14 years in prison, the D.A.’s office said.

“Advocating for this bill has been extremely empowering, and I am grateful to the DA’s office as well as my parents for supporting me through this process,” Hayman said in a news release. “This law will be revolutionary, and justice will be served to future victims.”

“Through our work with Kaylin Hayman, who courageously shared her experience as a victim, we were able to expose the real-life evils of computer-generated images of child sexual abuse,” Dist. Atty. Erik Nasarenko said in the release. “Kaylin’s strength and determination to advocate for this bill will protect minors in the future, and her efforts played a pivotal role in enacting this legislation.”
